The announcement this morning from augmented reality company Meta was as follows: “Meta has begun taking orders for the Meta 2 Development Kit, the first augmented reality (AR) product that delivers a totally immersive experience unlike any other AR product to date.”

It’s the typically breathless prose you expect from a press release, but as someone who has already experienced this product, I can guarantee you they are underselling the experience. But what is even more interesting than the ridiculously powerful hardware they have created are the ideas about where this product, and, in fact, AR in general, is going. So we sat down with Meta’s Chief Product Officer, Soren Harner, to really find out what is going on behind the (virtual) scene at this company.

So tell us about the mission of Meta?

We are a hardware company and a software company, but ultimately we are rethinking the user experience in a way that brings it closer to humanity. And it may sound like fluff when we talk about neuroscience, but I think you started seeing a couple years ago that screens are a problem. And you can’t see a lot on them and they are not very portable so people started playing around with head-worn displays and that’s what augmented reality and virtual reality have grown out of. And we have taken it to the next step and really tried to integrate it into our senses and our nervous system.

So when we talk about building user interfaces, we are looking at what our founder calls the “neuro path of least resistance.” So what I look forward to is finally having a computer where I don’t have to be my parents’ customer support channel because the way they intuitively interact with it is the correct way it works, and it is discoverable, and they don’t have to learn. And the way that we see this happening is we bring the digital content, the hologram if you will, into the physical world.

What was it about your first Meta demo that made you want to join the company?

Well, there were wires coming out of it, and then when I put it on it hurt my nose and I had to move my glasses around but I was able to see a 3D object in front of me and a person standing behind it, and you could really get the feeling that this was going to be something that was going to be the next form factor shift.

So how does that help move the experience forward?

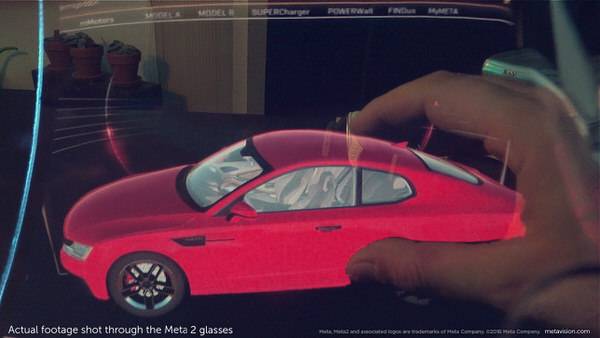

People talk about augmented reality and layering digital content on top of the world, but that doesn’t really do it justice. Doing it justice means you are thinking about anchoring it to the world, and it behaves like a physical object. So when you see it, and you are the person creating that experience, you understand the twelve or thirteen depth cues that all need to happen and align in order for that to feel real. And they understand that if you reach out and touch something and you couple that with being very realistic in terms of how you are representing it, in addition to getting all the 3D cues right, you get that real sense of space.

Now the audio lines up with that as well and then you have all your senses telling you that that exists. And even though you don’t have haptics, you may still “feel” it. We see this as a collaboration device from the ground up, and when I can anchor digital content on a table, between us, we can have an incredible conversation about it because we can make eye contact because with the Meta 2 everything below the eyes is clear and transparent. And to give a very immersive wide field of view, we have been able to create a see-through design that approaches the field of view of a VR device yet with more resolution. So you can, for instance, read text on it.

Who is the audience for this? What is the killer app?

So this is our second generation. Our first generation was kind of the “build it and they will come” sort of thing, and they did, which was great because we made fourteen hundred of them! So we sold out, which was good. And people were building all sorts of stuff. So we had everyone from a major aerospace company to hobbyists buying this, and we had people creating games and more than games people trying to solve real problems with it.

What was the most surprising thing you saw someone develop?

We saw a group in the Netherlands do surgery with it. They built a proof of concept to do jaw surgeries. That was surprising…and slightly worrisome! Not endorsed by us, but we did see lots of interesting use case scenarios, and we also realized what we needed to crack in the next version was a wider field of view. We had one that was about the same as a Microsoft HoloLens. And people struggled to get that sense of being real because things were being cut off. But if you could expand that like 90 degrees diagonal then suddenly you have a completely different ballgame because you have all this area to display content.

So we solved that problem, but the second problem was direct manipulation with the hands. And so we were talking before about the neuroscience, and we thought about how people reach out and grab something and how that integrates so you almost feel it; integrating all your senses so you get that when you reach out and directly grab it and manipulate it. You don’t get that through gestures. Other folks use air taps and gestures like a thumbs-up sign, and you have to learn those; we wanted something you could use and interact with that just made sense.

And so that is this second generation of hands interaction we have built, so those are the two flagship features. And this all comes together in a collaborative environment. And being able to use the space between the two of you to interact and have a shared workspace.

So your examples have precedents in the real world. Are you seeing use cases that could only happen in this environment?

Well certainly if you get into being in two places at the same time. So being a remote collaborator and sharing documents and it is like something you could only do in augmented reality where you can put a virtual person in a chair like a virtual Skype. Being in two places at once is something you can’t normally do.

We call it the “Kingsman Effect” (after the movie) where you can all be in separate rooms but you have a table in front of you and the systems are communicating and you are putting the same digital content in front of everyone and then putting them in the room.

So, for IoT, this creates an incredible visual interface.

Well, sensors give you superpowers. And IoT sensors, things like cameras that are reading the world and are connected together, and thermostats, why should they each have to have their own separate screen? So, unless you are a screen manufacturer, that is not an optimal experience. But if you could just be wearing the screen, and you are in proximity and you can pick something up and tie it into your display device, that has huge potential.

With IoT we talk about having the OS built around them. This is our fundamental vision of augmented reality, to anchor you in the world, and this person is at the center of that feedback loop driving that. We talk about having access to rich types of information and that is about integrating you with information retrieval and that fundamentally is a machine learning type of process. It’s all about filtering, it’s all about anticipating information relevant to that moment.

Having to have twenty apps on your phone, that is because the app model is broken. As soon as you leave that screen real estate you have an expansive world where user interface elements could be anywhere in the environment. Then they have to be able to draw your attention to them when they are relevant, and that is fundamentally not the app model. It’s more if the sensor is over there, augmented reality makes it blink so it gets your attention when you look at it, and that’s the natural way to interact with it, not with an app. And that is the path everyone is taking. With the Meta 2 and the SDK, this is about creating the applications to try out exactly these types of things.