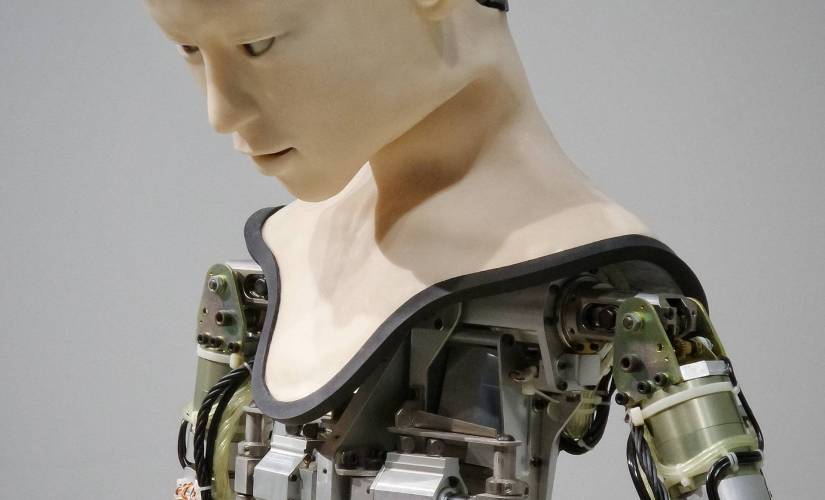

Artificial intelligence (AI) pioneer Dr. Geoffrey Hinton has made headlines by leaving Google so that he can speak openly about the potential dangers of AI. Over a career spanning half a century, Dr. Hinton has played a vital role in the development of AI technology, including the foundational work behind popular chatbots like ChatGPT. He now fears that the rapid advancement of AI could have serious consequences for society. Let’s delve into Dr. Hinton’s journey and his warnings about the risks associated with AI.

In 2012, Dr. Geoffrey Hinton and two of his graduate students at the University of Toronto built a neural network that laid the intellectual groundwork for the AI systems used by tech giants today. Their approach became a foundation for generative AI, the technology powering popular chatbots like ChatGPT. Dr. Hinton’s work has been hailed as instrumental in shaping the future of AI.

Despite his pivotal role in AI development, Dr. Hinton has joined a growing chorus of critics who believe that companies are rushing headlong into dangerous territory with their aggressive pursuit of generative AI-based products. Concerns about the potential risks of AI have led him to leave his position at Google after more than a decade, allowing him to openly voice his worries.

Dr. Hinton acknowledges that his life’s work now brings him some regret, but he consoles himself with the thought that if he hadn’t pursued it, someone else would have. His concerns revolve around the potential misuse of AI, the erosion of job opportunities, and the long-term risks to humanity.

One of the immediate worries surrounding AI is its potential to become a tool for misinformation. Generative AI can already be used to create convincing fake photos, videos, and text. Dr. Hinton fears that the average person may struggle to discern what is true and what is not, as the internet becomes flooded with misleading content.

Furthermore, the advancement of AI technology could lead to significant job displacement. While current chatbots like ChatGPT tend to complement human workers, they have the potential to replace paralegals, personal assistants, translators, and others whose work is largely routine. Dr. Hinton is concerned that AI may take away more than drudge work, potentially leaving many people unemployed.

Dr. Hinton also warns that future versions of AI technology could pose an even greater threat to humanity. Because AI systems analyze vast amounts of data, they can learn unexpected behaviors. This becomes particularly concerning when individuals and companies allow AI systems not only to generate their own code but also to run that code autonomously.

The idea that AI could surpass human intelligence was once considered a distant possibility. However, Dr. Hinton now believes that the rapid progress of AI technology brings this prospect closer than ever before. He expresses particular concern about the development of truly autonomous weapons, which he refers to as “killer robots.”

In response to the potential risks associated with AI, various groups and individuals have called for regulation and collaboration within the industry. OpenAI, a San Francisco-based startup, released a new version of ChatGPT earlier this year, prompting over 1,000 technology leaders and researchers to sign an open letter advocating for a six-month moratorium on the development of new AI systems. The letter emphasizes the profound risks that AI technologies pose to society and humanity.

Dr. Hinton believes that the competition between tech giants like Google and Microsoft will escalate into a global race that cannot be stopped without some form of global regulation. However, he acknowledges that regulating AI may be challenging, as there is no way to determine if companies or countries are secretly working on potentially dangerous AI technologies.

To address these concerns, Dr. Hinton believes that leading scientists worldwide must collaborate to develop methods for controlling AI technology before it is further scaled up. He stresses the importance of understanding how to control AI systems effectively before they become more pervasive in society.

First reported by The New York Times